Network Latency: How to reduce it?

Network latency affects the performance of any technology connected to the internet, from gaming to professional correspondence. If you regularly use a device connected to the internet for personal or professional reasons, you may want to learn more about what network latency is and how you can decrease it.

What is Network Latency?

Network latency, sometimes called network lag, is the time it takes for a request to travel from the sender to the receiver and for the receiver to process that request. In other words, it is the time it takes for a request sent from the browser to be processed by the server and for the response to be returned.

A network with short communication delays is called a low-latency network, and one with long delays is called a high-latency network. Any delay affects website performance.

Consider a simple e-commerce store that caters to users worldwide. High latency makes browsing categories and products slow and frustrating, and in a space as competitive as online retailing, a few extra seconds can amount to a fortune in lost sales.

Why is latency important?

As more companies undergo digital transformation, they use cloud-based applications and services to perform basic business functions. Operations also rely on data collected from smart devices connected to the Internet, which are collectively called the Internet of Things. Latency can create inefficiencies, especially in real-time operations that depend on sensor data. High latency also undercuts the benefit of spending more on network capacity: user experience and customer satisfaction can suffer even when a business pays for expensive network circuits.

Causes of network latency

Here are the most common causes of network latency:

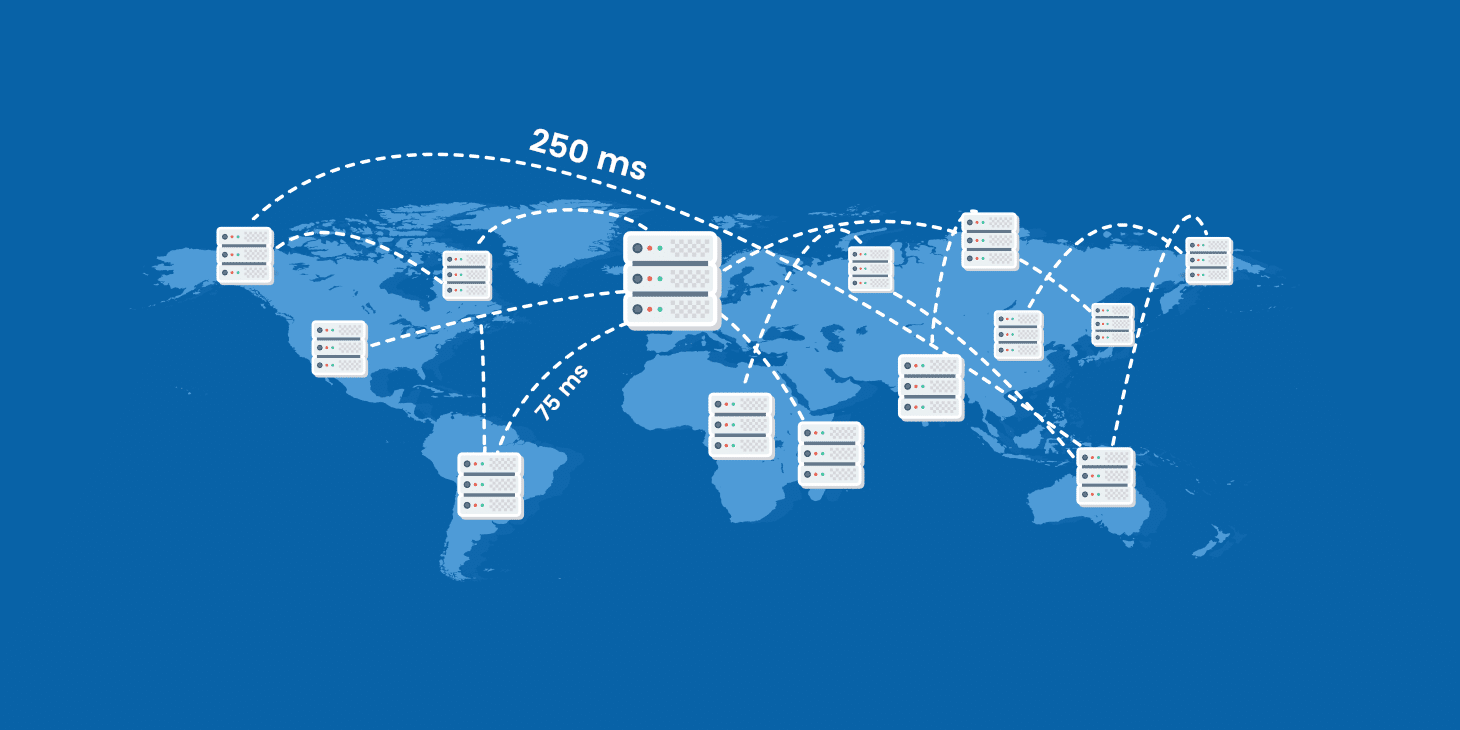

- Distance: The distance between the device sending the request and the server is the prime cause of network latency.

- Heavy traffic: Too much traffic saturates the available bandwidth, so packets wait in queues and latency rises.

- Packet size: Large network payloads (such as those carrying video data) take longer to send than small ones.

- Packet loss and jitter: A high percentage of packets failing to reach the destination or too much variation in packet travel time also increases network latency.

- User-related problems: A weak network signal and other client-related issues (such as being low on memory or having slow CPU cycles) are common culprits behind latency.

- Too many network hops: High numbers of network hops cause latency, such as when data must go through various ISPs, firewalls, routers, switches, load balancers, intrusion detection systems, etc.

- Gateway edits: Network latency grows if too many gateway nodes edit the packet (such as changing hop counts in the TTL field).

- Hardware issues: Outdated equipment (especially routers) is a common cause of network latency.

- DNS errors: A faulty domain name system server might slow down a network, cause lookups to fail, or resolve names to the wrong address.

- Internet connection type: Different transmission media have different latency characteristics. DSL, cable, and fiber tend to have low lag (in the 10-42 ms range), while satellite has higher latency.

- Malware: Malware infections and similar cyber attacks also slow down networks.

- Poorly designed websites: A page that carries heavy content (like too many HD images), loads files from a third party, or relies on an over-utilized backend database performs more slowly than a well-optimized website.

- Poor web-hosting service: Shared hosting often causes latency, while dedicated servers are typically less prone to lag.

- Acts of God: Heavy rain, hurricanes, and stormy weather disturb signals and lead to lag.
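To make the distance and hop-count causes concrete, here is a minimal sketch that estimates a lower bound on round-trip time. It assumes signals travel through fiber at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum). The function names, the per-hop delay, and the sample paths are hypothetical values chosen for illustration, not measurements.

```python
# Assumed round figure: signal speed in optical fiber, in km per second.
FIBER_SPEED_KM_PER_S = 200_000


def propagation_delay_ms(distance_km: float) -> float:
    """One-way propagation delay over fiber, in milliseconds."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000


def estimated_rtt_ms(distance_km: float, hops: int = 0, per_hop_ms: float = 0.0) -> float:
    """Round trip: two one-way trips, each adding a processing delay per hop."""
    one_way_ms = propagation_delay_ms(distance_km) + hops * per_hop_ms
    return 2 * one_way_ms


# Hypothetical paths: (label, cable distance in km, number of hops).
paths = [
    ("same city", 50, 5),
    ("cross-continent", 4_000, 12),
    ("intercontinental", 10_000, 18),
]
for label, km, hops in paths:
    # 0.5 ms per hop is an assumed figure, not a measured one.
    print(f"{label}: about {estimated_rtt_ms(km, hops, per_hop_ms=0.5):.1f} ms")
```

Real paths are longer than the straight-line distance and add queuing delay, so measured values are usually higher than this estimate.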

Latency vs. Bandwidth vs. Throughput – What Is the Difference?

Latency, throughput, and bandwidth are all connected, but they refer to different things. Bandwidth measures the amount of data that can pass through a network at a given time. A network with a gigabit of bandwidth, for example, will often perform better than a network with only 10 Mbps of bandwidth.

Throughput refers to how much data can pass through, on average, over a specific period. Throughput is impacted by latency, so there may not be a linear relationship between how much bandwidth a network has and how much throughput it is capable of producing. For example, a network with high bandwidth may have components that process their various tasks slowly, while a lower-bandwidth network may have faster components, resulting in higher overall throughput.

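Latency is not a product of bandwidth or throughput. It refers to the amount of time it takes for data to travel after a request has been made, and it depends mostly on distance, the number of hops, and processing delays along the path. The three still interact: a congested link with too little bandwidth makes packets queue, which raises latency, and high latency in turn lowers the throughput a connection can achieve.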
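One way to see why bandwidth and throughput do not scale together is the window limit on a single connection: a sender can have at most one window of unacknowledged data in flight per round trip, so throughput cannot exceed window size divided by round-trip time. The sketch below assumes a 65,535-byte window, the classic TCP maximum without window scaling. Modern systems often use larger windows, so treat the numbers as illustrative. The function name is hypothetical.

```python
def max_throughput_mbps(bandwidth_mbps: float, rtt_ms: float, window_bytes: int = 65_535) -> float:
    """Upper bound on single-connection throughput, in megabits per second.

    The connection is limited either by the link bandwidth or by how much
    data one window can deliver per round trip, whichever is smaller.
    """
    window_limit_mbps = (window_bytes * 8) / (rtt_ms / 1000) / 1_000_000
    return min(bandwidth_mbps, window_limit_mbps)


# A gigabit link with a 100 ms round trip versus a 10 Mbps link with 10 ms.
for bandwidth, rtt in [(1000, 100), (10, 10)]:
    limit = max_throughput_mbps(bandwidth, rtt)
    print(f"{bandwidth} Mbps link, {rtt} ms RTT: at most {limit:.2f} Mbps")
```

Under these assumptions the slower link with the short round trip can outperform the gigabit link with the long one, which is the non-linear relationship described above.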

How to measure Network Latency

There are two ways of measuring latency – either as the Round Trip Time or the Time to First Byte.

Round Trip Time (RTT) refers to the time a data packet takes to travel from the client to the server and back. On the other hand, Time to First Byte (TTFB) is how long it takes for the client to receive the first byte of the server's response after sending a request, so it includes the server's processing time as well as the network delay.

Network latency is measured in milliseconds. In website speed tests, this value is often called the ping. The lower the ping, the lower the latency. A reliable network will typically have an acceptable ping that ranges from 50 to 100 milliseconds. Under 50 milliseconds is considered good. Conversely, network latency of over 100 milliseconds falls at the high end of the spectrum.
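Both measurements can be approximated from the client side with Python's standard library. This is a rough sketch, not a replacement for dedicated tools: it treats the duration of a TCP handshake as an estimate of one round trip, and it times TTFB from the moment the request is sent until the response status line and headers have been read. The function names are hypothetical.

```python
import http.client
import socket
import time


def tcp_connect_time_ms(host: str, port: int = 80, timeout: float = 5.0) -> float:
    """Approximate RTT by timing a TCP handshake (roughly one round trip).

    If host is a name rather than an IP address, DNS lookup time is included.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000


def time_to_first_byte_ms(host: str, path: str = "/", port: int = 80, timeout: float = 5.0) -> float:
    """Approximate TTFB for a plain HTTP GET request."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.connect()  # connect first so the handshake is not counted
        start = time.perf_counter()
        conn.request("GET", path)
        conn.getresponse()  # returns once the status line and headers arrive
        return (time.perf_counter() - start) * 1000
    finally:
        conn.close()
```

For example, `tcp_connect_time_ms("example.com")` and `time_to_first_byte_ms("example.com")` would return both values for a placeholder host. TTFB is normally the larger of the two because it adds server processing time to the round trip.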

Tips for Reducing Network Latency

If you’re experiencing a slow network or lag, you may have high network latency. These are some methods you can try to reduce your network latency and improve your experience:

Measure your network latency

Before you try methods for reducing your network latency, you may want to ensure latency is the real problem. To find out, run a latency-measuring tool such as the ping command or an online speed test. Typically, functional network latency is anything less than 100 milliseconds, and between 20 and 40 milliseconds is good for most purposes.
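A single reading can mislead, so it helps to take several and summarize them. The sketch below assumes you already have a list of round-trip samples in milliseconds, for example copied from a ping run. It reports the median and a simple jitter figure (the average change between consecutive samples), then rates the result against the 50 and 100 millisecond thresholds used in this article. The function names and sample values are made up for illustration.

```python
from statistics import mean, median


def summarize_latency(samples_ms: list[float]) -> dict[str, float]:
    """Summarize round-trip samples: minimum, median, and jitter."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to compute jitter")
    # Jitter here is the mean absolute difference between consecutive samples.
    changes = [abs(b - a) for a, b in zip(samples_ms, samples_ms[1:])]
    return {
        "min_ms": min(samples_ms),
        "median_ms": median(samples_ms),
        "jitter_ms": mean(changes),
    }


def rate_latency(latency_ms: float) -> str:
    """Rate a latency value using this article's thresholds."""
    if latency_ms < 50:
        return "good"
    if latency_ms <= 100:
        return "acceptable"
    return "high"


# Hypothetical samples in milliseconds, not real measurements.
summary = summarize_latency([20, 30, 25, 45])
print(summary, rate_latency(summary["median_ms"]))
```

Using the median rather than the average keeps one unusually slow reading from distorting the result.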

Use a wired connection

Determine if all devices are experiencing network latency or if it’s a certain device you use. If only one or a few devices have high latency, consider using a wired connection for those devices. In most cases, you can connect an Ethernet cable from the modem or router to the device to provide direct network access.

Eliminate background operations

Depending on your network’s capabilities, your device may be attempting to operate too many programs or functions at once. If you have many devices connected to the network, like smart home devices or consoles, consider disconnecting a few. You can also check the background functions on your device to ensure other operations are not increasing the latency.

Update your internet plan

If you’ve attempted other methods for improving network latency and find that it’s still high, consider speaking with your internet provider. A telecommunications consultant may be able to identify and resolve other issues causing the high latency. If there are no other issues affecting the network latency, you may need to upgrade your plan to improve your internet experience.

Move closer to the router

If you are using a wireless connection and experiencing high network latency, consider moving closer to the router. The signal from your router may weaken the further your device is from it. If you can’t move your router, consider buying network-boosting devices to strengthen the connection over distances.

Conclusion

Network latency is the time it takes for a request to travel from the client device to the server, be processed, and return. Lower latency means faster data transmission and website speed. Several factors influence latency, such as distance, web page weight, and transmission software and hardware. High latency occurs due to a variety of causes, from DNS server errors to end-user device issues. Some ways to reduce network latency include measuring it first to confirm the problem, using a wired connection, eliminating background operations, moving closer to the router, and upgrading your internet plan.