What is Docker?

The word “Docker” refers to several things, including an open-source community project; tools from the open-source project; Docker Inc., the company that primarily supports that project; and the tools that the company formally supports. The fact that the technologies and the company share the same name can be confusing.

Docker is a tool that enables you to create, deploy, and manage lightweight, stand-alone packages that contain everything needed to run an application (code, libraries, runtime, system settings, and dependencies). These packages are called container images, and a running instance of an image is called a container.

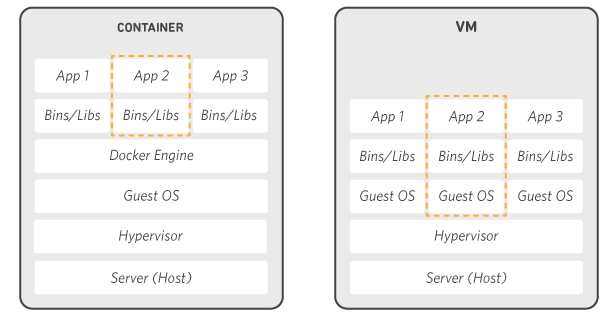

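Each container runs with its own allocation of CPU, memory, block I/O, and network resources, but it does not need a kernel and operating system of its own: all containers on a host share the host's kernel. Docker is often easiest to understand by comparison with virtual machines, but the two differ in how they share or dedicate resources. Each VM runs a complete guest operating system on virtualized hardware, while containers share the host's kernel.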
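Per-container CPU and memory limits are enforced by the Linux kernel's control groups (cgroups). As a hedged sketch, the hypothetical helpers below show the arithmetic behind two `docker run` flags. Docker's documentation describes `--cpus=1.5` as equivalent to `--cpu-period=100000 --cpu-quota=150000`, which on a cgroup v2 host should correspond to a `cpu.max` value of `150000 100000`; `--memory` accepts sizes with a `b`, `k`, `m`, or `g` suffix in binary units. The function names are illustrative only and are not part of Docker.

```python
# Hypothetical helpers (not Docker's own code) that reproduce the arithmetic
# of `docker run --cpus` and `--memory` in cgroup v2 terms.

_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cpus_to_cpu_max(cpus: float, period_us: int = 100_000) -> str:
    """Return a cgroup v2 cpu.max value ("<quota> <period>") for a CPU share."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    quota_us = round(cpus * period_us)  # microseconds of CPU time per period
    return f"{quota_us} {period_us}"


def memory_to_bytes(limit: str) -> int:
    """Convert a size such as '256m' or '1g' to bytes (1m = 1024 * 1024)."""
    limit = limit.strip().lower()
    if limit[-1] in _UNITS:
        return int(limit[:-1]) * _UNITS[limit[-1]]
    return int(limit)  # a bare number is already a byte count


print(cpus_to_cpu_max(1.5))     # 150000 100000
print(memory_to_bytes("256m"))  # 268435456
```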

Containers help expand your Linode's functionality in several ways. For example, you can deploy multiple instances of Nginx for different stages (such as development and production). Unlike multiple virtual machines, these containers place a comparatively light load on your Linode's resources.
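The multi-stage Nginx setup could be scripted roughly as follows. This is a minimal sketch that only builds standard `docker run` arguments (`-d`, `--name`, `-p`) and, by default, prints them instead of running them. The container names (`nginx-development`, `nginx-production`) and host ports (8080, 8081) are hypothetical choices. The official `nginx` image listens on port 80 inside the container, so each stage maps a different host port to that same container port.

```python
import shlex
import subprocess


def nginx_run_args(stage: str, host_port: int, image: str = "nginx") -> list:
    """Build a `docker run` command for one Nginx container per stage."""
    return [
        "docker", "run",
        "-d",                        # run the container in the background
        "--name", f"nginx-{stage}",  # hypothetical naming scheme
        "-p", f"{host_port}:80",     # host port -> container port 80
        image,
    ]


def start_stages(stages: dict, dry_run: bool = True) -> list:
    """Print (dry run) or execute one `docker run` command per stage."""
    commands = [nginx_run_args(stage, port) for stage, port in stages.items()]
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # requires a local Docker install
    return commands


if __name__ == "__main__":
    start_stages({"development": 8080, "production": 8081})
```

Passing `dry_run=False` would actually start both containers, after which the development and production instances would answer on different host ports.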

Who uses Docker?

Docker is an open platform designed to benefit both developers and DevOps teams. Using Docker, developers can build, package, ship, and run applications as lightweight, portable, self-sufficient containers that can run on virtually any host that runs Docker. Containers allow developers to package an application with all of its dependencies and deploy it as a single unit. Because prebuilt application containers are self-contained, developers can focus on the application code without worrying about the underlying operating system or deployment system.

Additionally, developers can draw on thousands of open-source applications that are already packaged to run in Docker containers. For DevOps teams, Docker fits naturally into continuous integration and continuous delivery (CI/CD) toolchains and reduces the complexity of the system architecture needed to deploy and manage applications. With container orchestration services in the cloud, a developer can build containerized applications locally and then run those same containers in production on a cloud service, such as a managed Kubernetes service.

How do containers work? And why are they so popular?

Containers are made possible by process isolation and virtualization capabilities built into the Linux kernel. Control groups (cgroups) allocate resources among processes, and namespaces restrict a process's access to, or visibility into, other resources and areas of the system. Together, these capabilities let multiple application components share the resources of a single instance of the host operating system, in much the same way that a hypervisor lets multiple virtual machines (VMs) share the CPU, memory, and other resources of a single hardware server.
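Namespaces can be observed directly on a Linux host. Each entry under `/proc/self/ns` is a symbolic link whose target names the namespace type and an inode number, for example `pid:[4026531836]`. Processes in the same namespace see the same inode number, so a process inside a container and a process on the host should report different values for the namespaces Docker created for that container. The sketch below assumes Linux, returns an empty dictionary on systems without `/proc`, and uses hypothetical helper names.

```python
from __future__ import annotations

import os
import re
from pathlib import Path

# A namespace link target looks like "pid:[4026531836]".
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a /proc/<pid>/ns link target into (namespace type, inode)."""
    m = _NS_LINK.match(target)
    if m is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return m["kind"], int(m["inode"])


def current_namespaces(proc_ns: str = "/proc/self/ns") -> dict[str, int]:
    """Map each namespace entry of this process to its inode (Linux only)."""
    ns_dir = Path(proc_ns)
    if not ns_dir.is_dir():
        return {}  # not Linux, or /proc is not mounted
    result = {}
    for entry in ns_dir.iterdir():
        try:
            _, inode = parse_ns_link(os.readlink(entry))
        except (OSError, ValueError):
            continue  # skip entries we cannot read or parse
        result[entry.name] = inode
    return result


if __name__ == "__main__":
    # Run once on the host and once inside a container to compare inodes.
    for name, inode in sorted(current_namespaces().items()):
        print(f"{name:20} {inode}")
```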

As a result, container technology offers many of the benefits of VMs, including application isolation, cost-effective scalability, and disposability, plus important additional advantages:

- Lighter weight: Unlike VMs, containers don't carry the payload of an entire OS instance and hypervisor; they include only the OS processes and dependencies necessary to execute the code. Container sizes are measured in megabytes (vs. gigabytes for some VMs), so containers make better use of hardware capacity and start faster.

- Greater resource efficiency: With containers, you can often run several times as many copies of an application on the same hardware as you can with VMs, which can reduce your cloud spending.

- Improved developer productivity: Compared to VMs, containers are faster and easier to deploy, provision, and restart. This makes them ideal for use in continuous integration and continuous delivery (CI/CD) pipelines and a better fit for development teams adopting Agile and DevOps practices.
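The efficiency advantage comes down to simple arithmetic: the smaller each copy's footprint, the more copies fit on a host. The figures below are hypothetical (a 16 GiB host, a 200 MB application, and an assumed 1 GiB guest OS per VM), and the sketch considers memory only, ignoring CPU, I/O, and hypervisor overhead. Real footprints vary widely.

```python
def copies_that_fit(host_mem_mb: int, app_mem_mb: int, per_copy_overhead_mb: int = 0) -> int:
    """How many copies of an app fit in host memory (memory only, no CPU/I/O)."""
    per_copy = app_mem_mb + per_copy_overhead_mb
    if per_copy <= 0:
        raise ValueError("per-copy footprint must be positive")
    return host_mem_mb // per_copy


# Hypothetical figures, for illustration only.
HOST_MB = 16_384     # a 16 GiB host
APP_MB = 200         # the application itself
GUEST_OS_MB = 1_024  # assumed guest OS footprint for each VM

containers = copies_that_fit(HOST_MB, APP_MB)         # containers share the host kernel
vms = copies_that_fit(HOST_MB, APP_MB, GUEST_OS_MB)   # each VM carries its own OS
print(containers, vms)  # 81 13
```

With these assumed numbers, the container layout fits roughly six times as many copies (81 versus 13).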

Docker advantages

Docker containers provide a way to build applications that are easier to assemble, maintain, and move around than previous methods allowed. That provides several advantages to software developers.

Docker containers are minimalistic and enable portability. By isolating applications and their environments, Docker keeps them clean and minimal, which allows for more granular control and greater portability.

Docker containers enable composability. Containers make it easier for developers to compose the building blocks of an application into a modular unit with easily interchangeable parts, which can speed up development cycles, feature releases, and bug fixes.

Docker containers ease orchestration and scaling. Because containers are lightweight, developers can launch many of them to scale services. Those groups of containers then need to be orchestrated, which is where Kubernetes typically comes in.
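As a rough illustration of what an orchestrator does when scaling, the Kubernetes Horizontal Pod Autoscaler documents its basic rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), leaving the replica count unchanged when the ratio is within a tolerance (0.1 by default). The sketch below implements only that rule. Real autoscaling also involves stabilization windows, missing metrics, and pod readiness, and the `min_replicas`/`max_replicas` defaults here are arbitrary.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,  # arbitrary cap for this sketch
) -> int:
    """Simplified HPA-style rule: scale replicas by the metric ratio."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, 90, 60))  # 6
```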

Conclusion

As noted above, Docker lets you run more applications on the same hardware than technologies such as VMs do, and it makes applications easier to build and manage.

If you are interested in new technologies and have already used Docker, we encourage you to share your experiences with us and other users.